We present a class of sensor attacks against cameras, called GhostImage attacks, in which we use a projector to shine attack patterns into a camera so that they land in the ghost areas of the image (hence the name!). What's more, these attack patterns are specially crafted so that they are recognized by the machine learning model in the targeted autonomous system, which in turn controls its actuation.

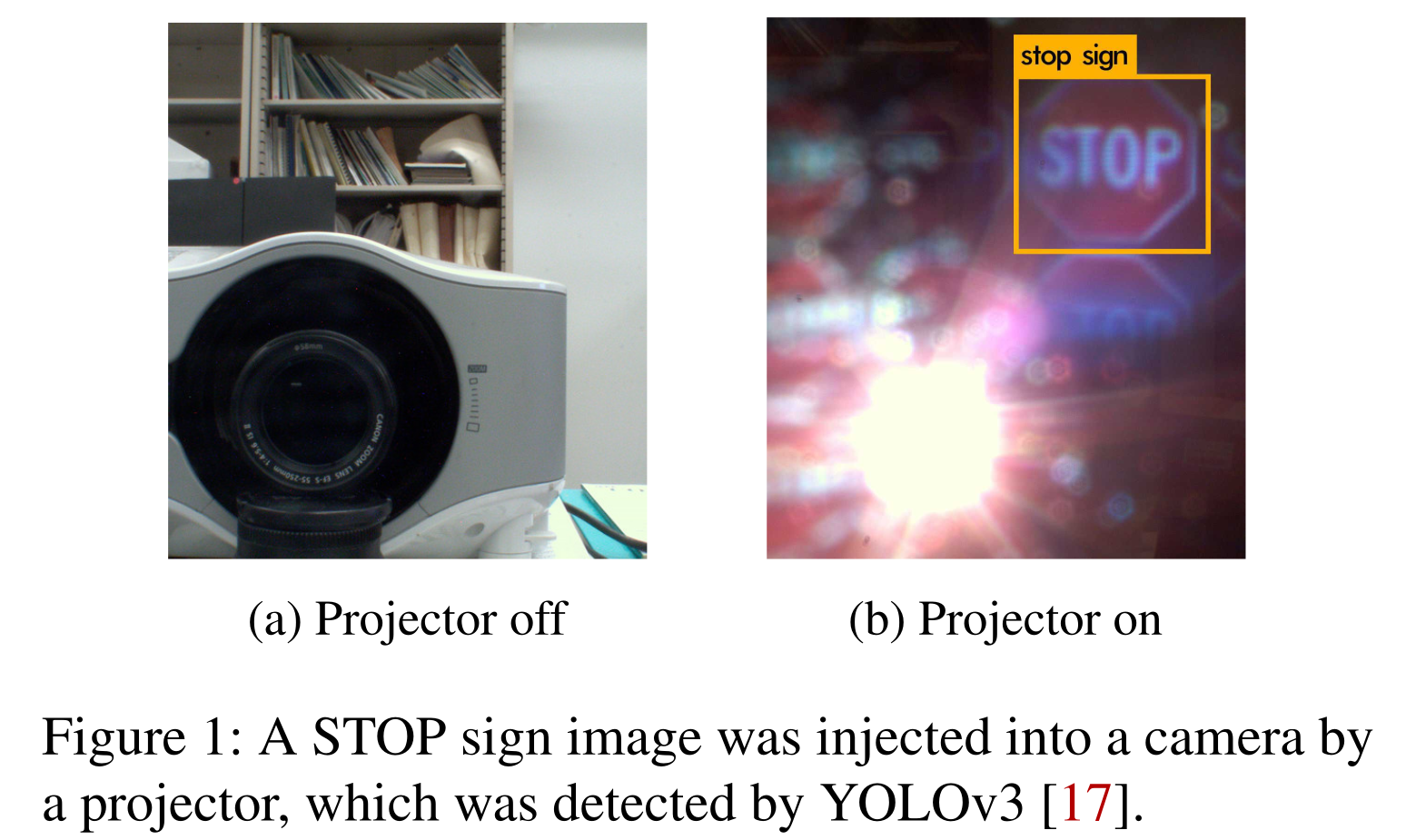

See the following two images, taken by the victim camera. On the left, with the projector off, we can see that the projector is facing the camera. As soon as the projector is turned on and projects an image of a STOP sign (the right image), we can clearly see a STOP sign in the captured image. More importantly, the STOP sign is recognized by YOLOv3.

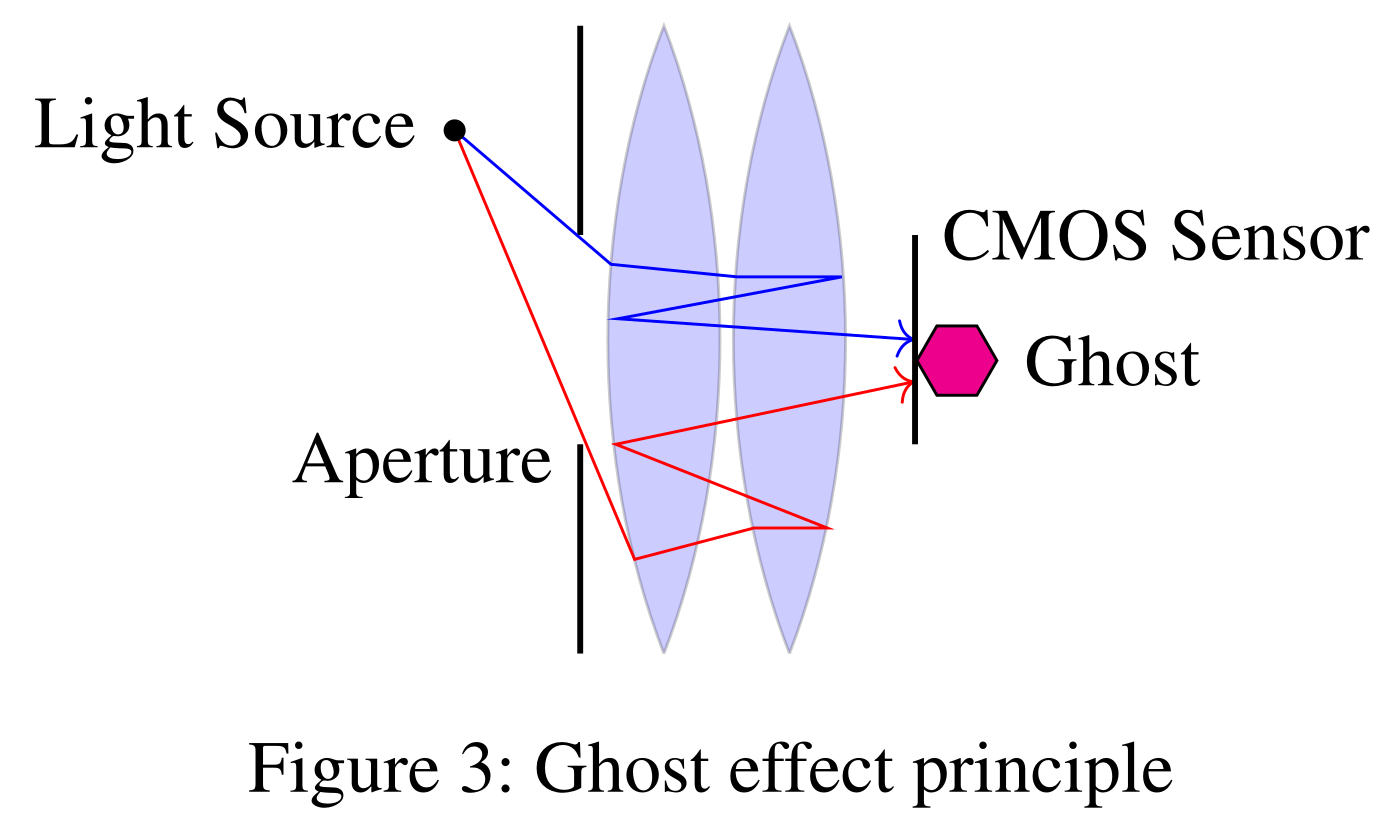

This is essentially what people know as the lens flare effect, a.k.a. the ghost effect. Due to imperfections of lenses (e.g., reflectance), light beams coming from the light source are not only refracted but also reflected among the lenses before they reach the CMOS image sensor. Theoretically, ghosts are always there, but they are normally too weak to be visible. However, when a strong light source is present, some ghosts become intense enough to show up on the image as polygons.

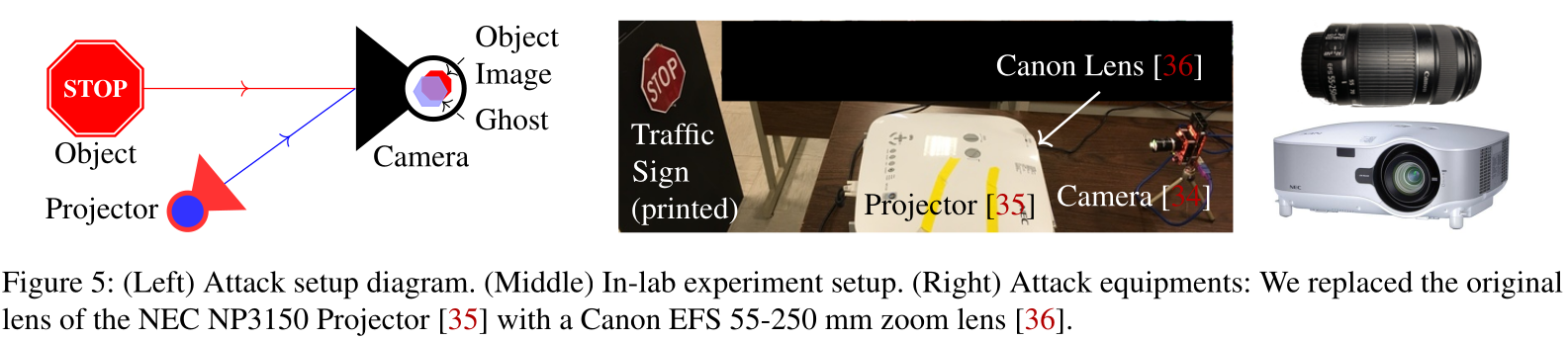

Typically, ghosts are polygons of a single color; this is because the light sources that cause these regular ghosts, such as light bulbs and flashlights, are single-point sources that emit just one color. In our experiments, however, we found that we are able to bring patterns into ghosts because a projector is a multiple-point source of light that can shine light beams in different, arbitrary colors. When the pixel density of the projection is high enough, multiple light beams in different colors are reflected among the lenses and land in the same ghost, which is why we can see the STOP sign in the above image. In fact, we replaced the factory lens of our projector with a Canon zoom lens that was designed for Canon DSLR cameras to further increase the pixel density (PPI) of the projection.

This is serious because sensor attacks in general are able to alter data at the source, thus bypassing traditional cybersecurity protections such as crypto-based authentication and integrity verification. When such false data are used by the automated decision-making AI of an autonomous system, such as a self-driving car or a surveillance system, severe consequences may occur.

For example, the attacker can inject an image of a STOP sign into a camera of an autonomous vehicle so that the vehicle stops on the highway (where there typically are no STOP signs). We call this a creation attack, in which a non-existent object is created in the image. As another example, in a surveillance system, if an intruder is not detected because the camera recognizes the intruder as a bicycle (an alteration attack), the house may be broken into without raising an alarm.

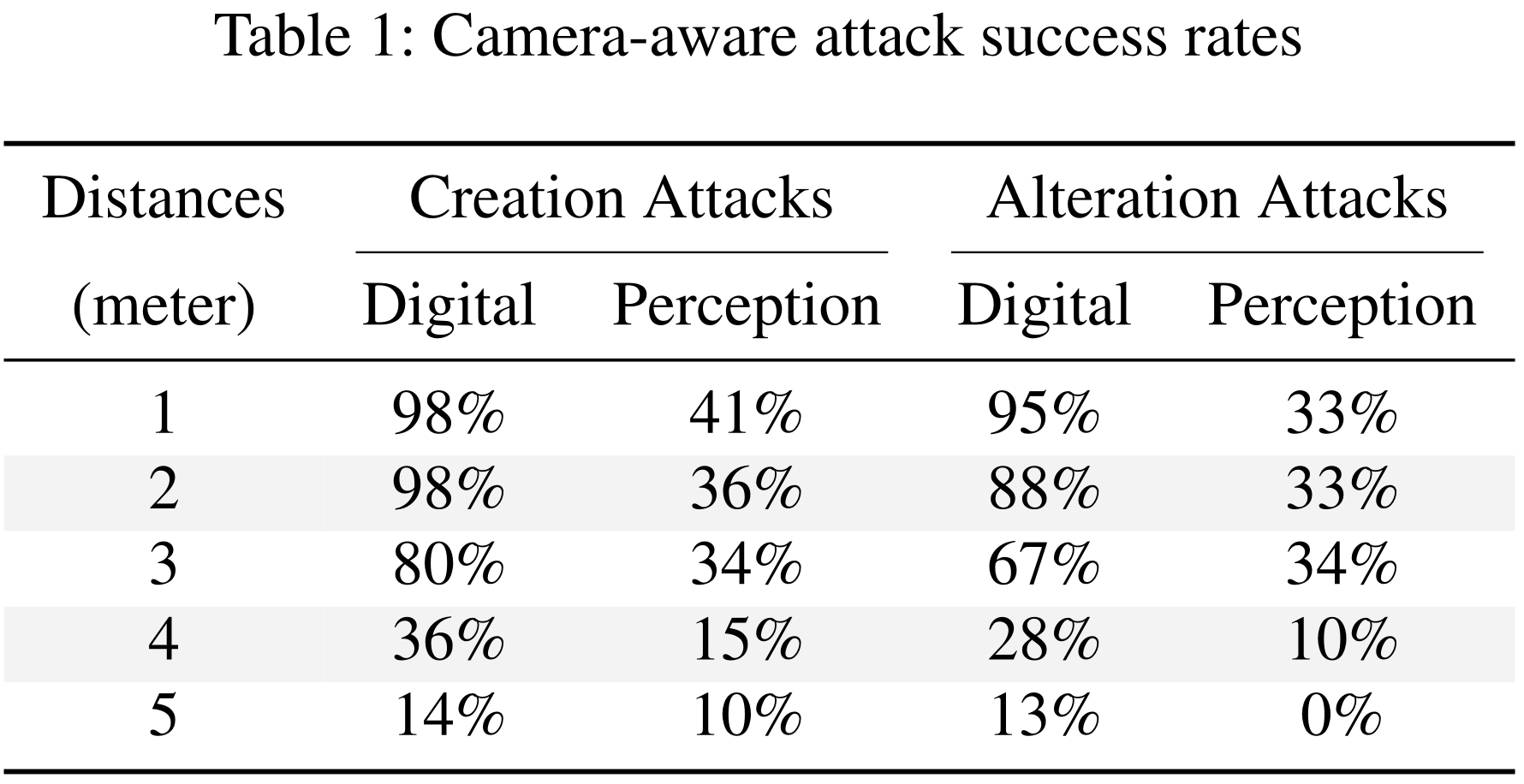

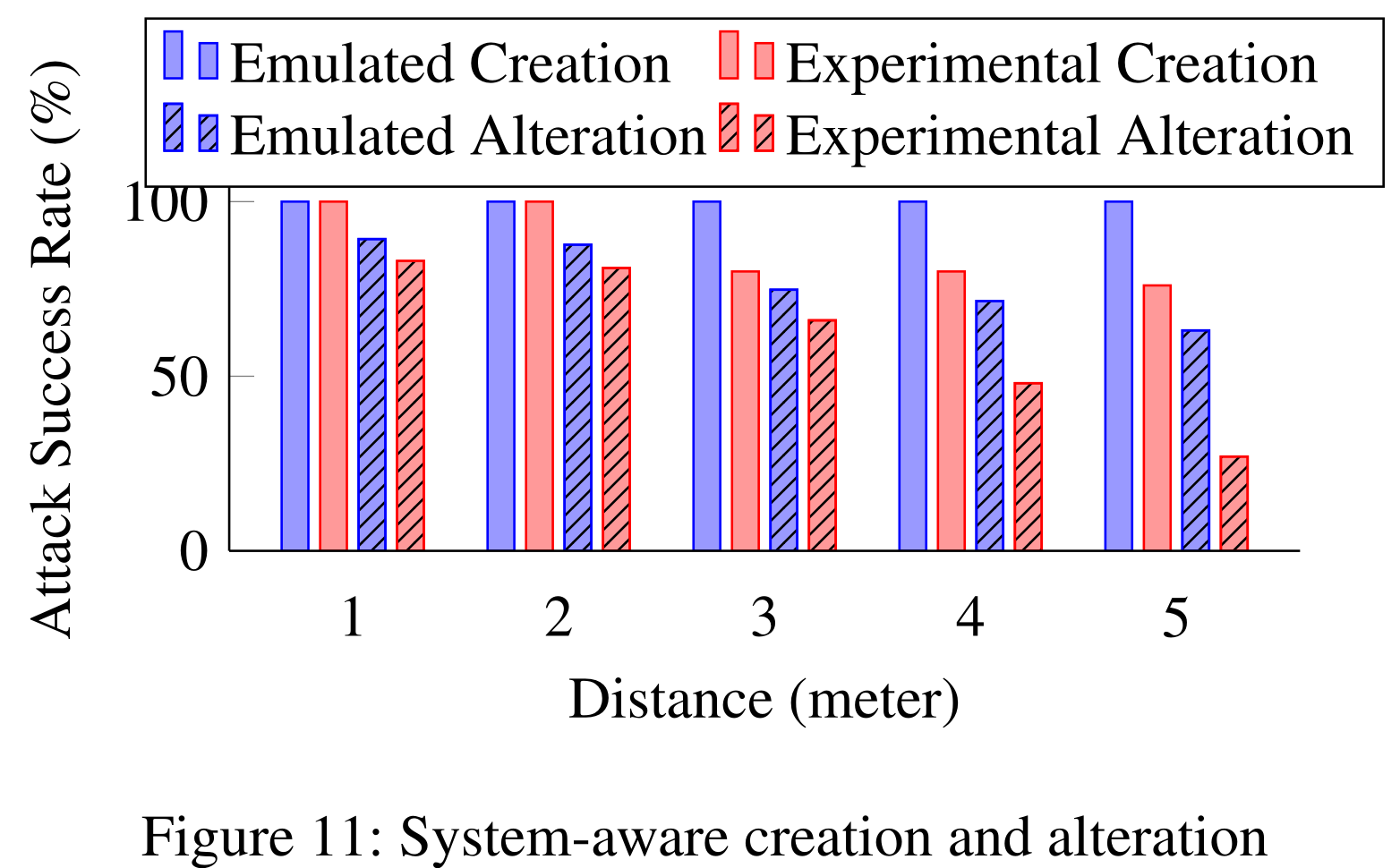

To demonstrate the attack, we trained a neural network to recognize US traffic signs and varied the distance between the projector and the camera. The table below shows the attack success rates. Digital results are based on simulation, while perception results are experimental.

None taken, till you said "no offense"... Indeed, long distances didn't yield good results. This is because at long distances, the pixel resolution in the ghost is too low for the pattern to be recognized by the classifier. Additionally, the channel between the projector and the camera introduces randomness and distortion, which further degrade the attack success rates.

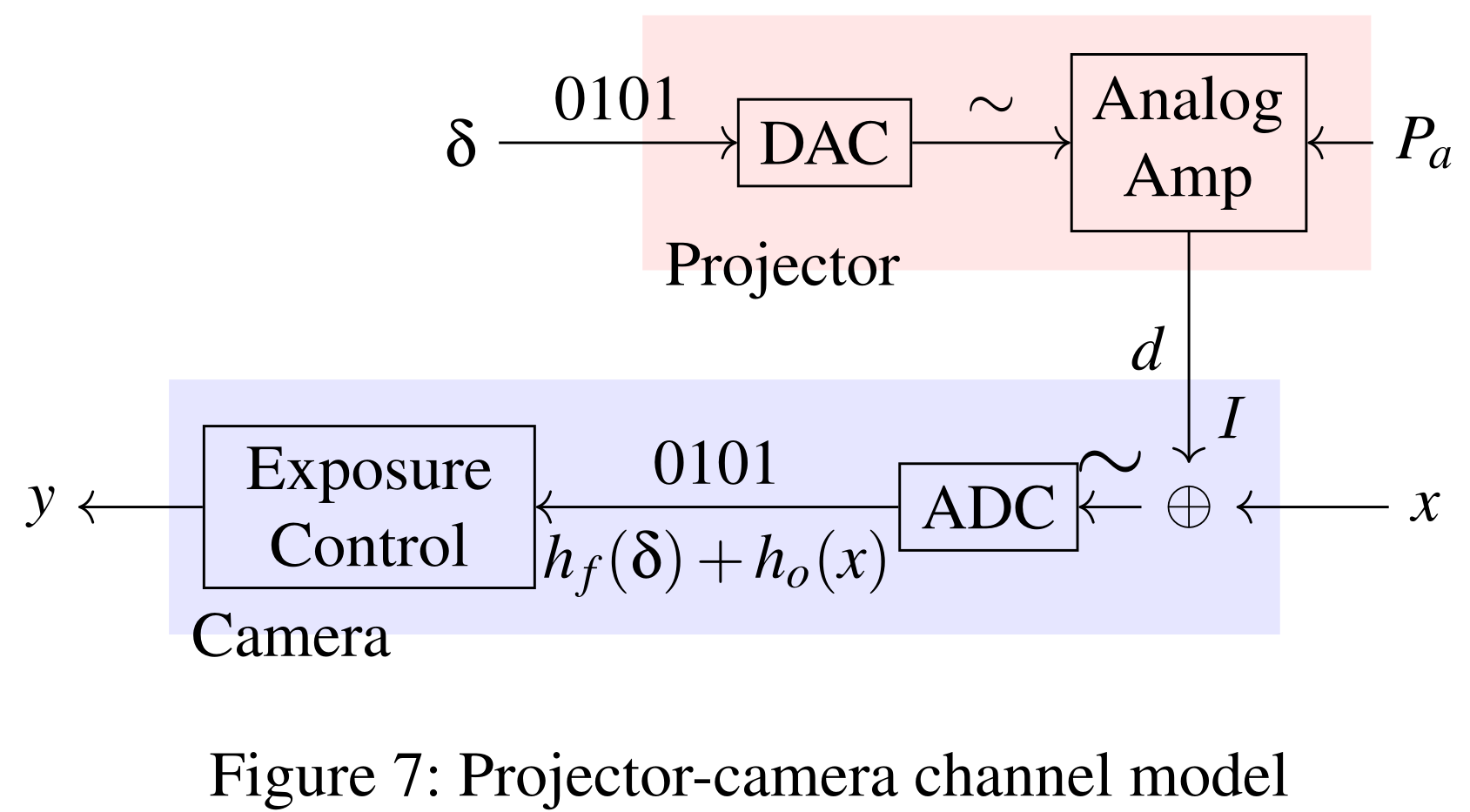
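To make the resolution argument concrete, here is a minimal sketch of how detail disappears when a pattern has to fit into fewer pixels. It does not model distance, optics, or the actual ghost; the helper names `block_average` and `contrast` and the checkerboard test pattern are assumptions made purely for illustration.

```python
import numpy as np

def block_average(img, factor):
    """Downsample a 2-D image by averaging non-overlapping factor x factor blocks."""
    h, w = img.shape
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by factor")
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

def contrast(img):
    """Difference between the brightest and darkest pixel."""
    return float(img.max() - img.min())

# 8x8 checkerboard with one-pixel squares: the finest detail this grid can hold.
fine = (np.indices((8, 8)).sum(axis=0) % 2).astype(float)

# Halving the resolution averages each 2x2 block into a single pixel.
coarse = block_average(fine, 2)

print("contrast at 8x8:", contrast(fine))
print("contrast at 4x4:", contrast(coarse))
```

In this toy case the checkerboard collapses into a uniform gray at half the resolution, so nothing is left for a classifier to pick up. A real ghost pattern would degrade more gradually, but the direction of the effect is the same.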

Yes, we take into account the channel effects (including color distortion and auto-exposure control), and we also take advantage of the non-robustness of neural networks. Specifically, the channel model mimics the channel effects in an end-to-end fashion: given an input image, the model outputs the image as it would appear after being projected by the projector and captured by the camera. We find the optimal adversarial ghost patterns by solving an optimization problem in which the channel model is embedded.

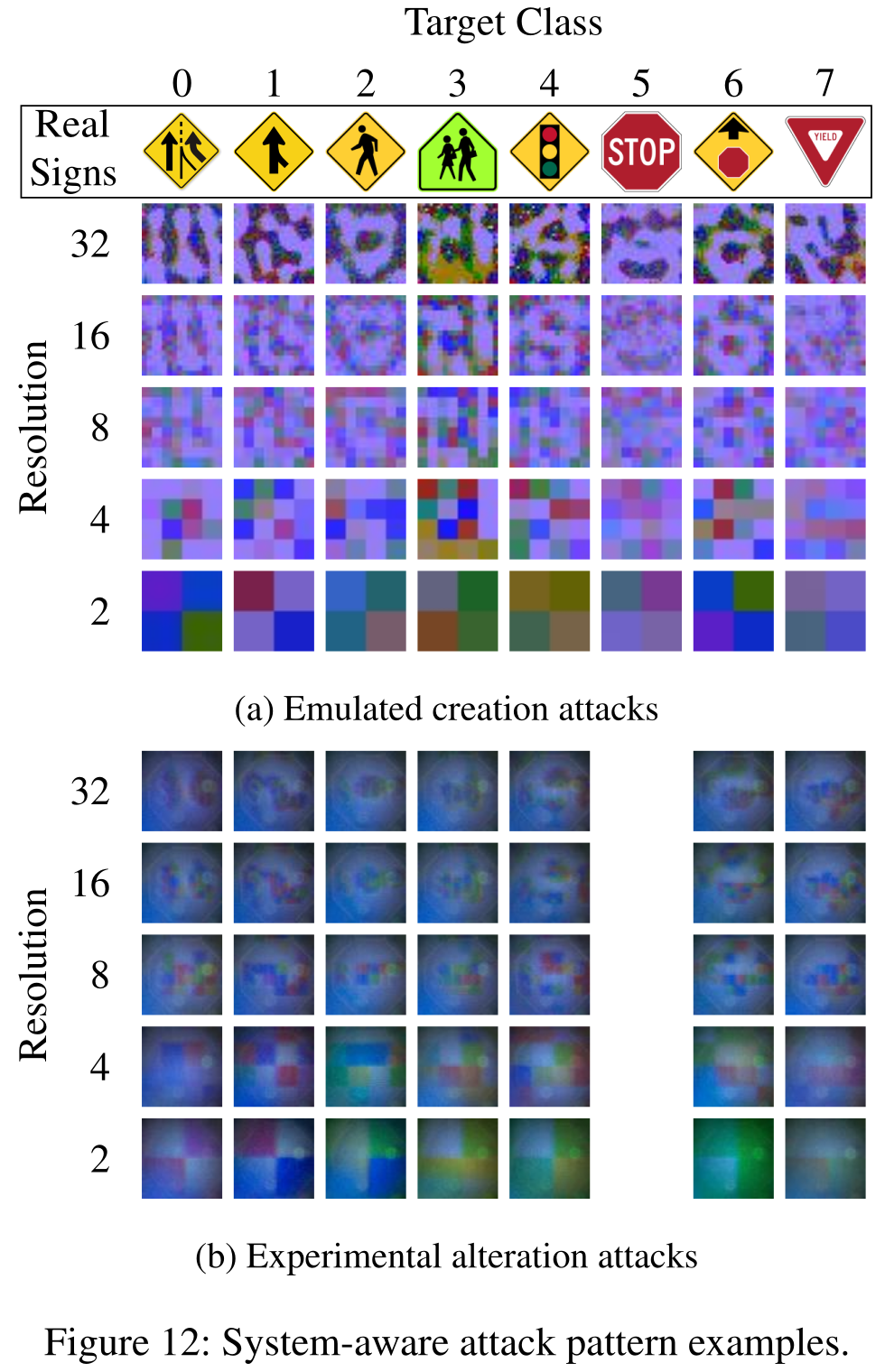
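The idea of embedding a channel model in the optimization can be sketched roughly as follows. This is a toy illustration under strong assumptions, not our actual formulation: the channel is reduced to a hypothetical 3x3 color-mixing matrix `M` plus a fixed `gain` standing in for exposure, the classifier is a made-up linear softmax model (`W`, `b`) rather than a neural network, and the optimizer is plain projected gradient descent. All names and numbers are invented for the example.

```python
import numpy as np

def channel(x, M, gain):
    """Hypothetical channel: mix each pixel's RGB by the 3x3 matrix M
    (color distortion), then scale by a fixed gain (stand-in for exposure)."""
    return gain * x @ M.T

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def predict(x, W, b, M, gain):
    """Class probabilities of the toy linear classifier on the captured image."""
    return softmax(W @ channel(x, M, gain).ravel() + b)

def optimize_pattern(W, b, M, gain, target, shape, steps=200, lr=0.5):
    """Projected gradient descent on the cross-entropy toward `target`,
    differentiating through the channel model."""
    x = np.full(shape, 0.5)                        # start from mid-gray
    onehot = np.eye(W.shape[0])[target]
    for _ in range(steps):
        p = predict(x, W, b, M, gain)
        g_y = (W.T @ (p - onehot)).reshape(shape)  # dLoss / d(captured image)
        g_x = gain * g_y @ M                       # chain rule through the channel
        x = np.clip(x - lr * g_x, 0.0, 1.0)        # projector values stay in [0, 1]
    return x

# Toy setup (all values made up): 4 pixels, 3 classes; class k sums color channel k.
n_pixels = 4
W = np.tile(np.eye(3), (1, n_pixels))
b = np.zeros(3)
M = np.array([[0.9, 0.1, 0.0],    # captured R
              [0.1, 0.8, 0.1],    # captured G
              [0.0, 0.2, 0.8]])   # captured B
gain = 0.7
target = 2                        # the class that responds to blue

pattern = optimize_pattern(W, b, M, gain, target, shape=(n_pixels, 3))
probs = predict(pattern, W, b, M, gain)
print("predicted class:", int(np.argmax(probs)))
```

The point of the sketch is that the gradient flows through `channel` before it reaches the pattern, so the optimizer searches for what to project rather than for what the camera should see. A more faithful version would also have to handle sensor saturation, noise, and a real network.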

Pretty well. See the bar plots below, and also some examples of the optimal adversarial ghost patterns.

Thanks! This work was accepted at RAID 2020. The video of the conference presentation can be found here.

You can cite the paper as follows. Also, the project is open-sourced.

@inproceedings{man2020ghostimage,
  title={GhostImage: Remote Perception Domain Attacks against Camera-based Image Classification Systems},
  author={Man, Yanmao and Li, Ming and Gerdes, Ryan},
  booktitle={Proceedings of the 23rd International Symposium on Research in Attacks, Intrusions and Defenses (USENIX RAID 2020)},
  year={2020}
}